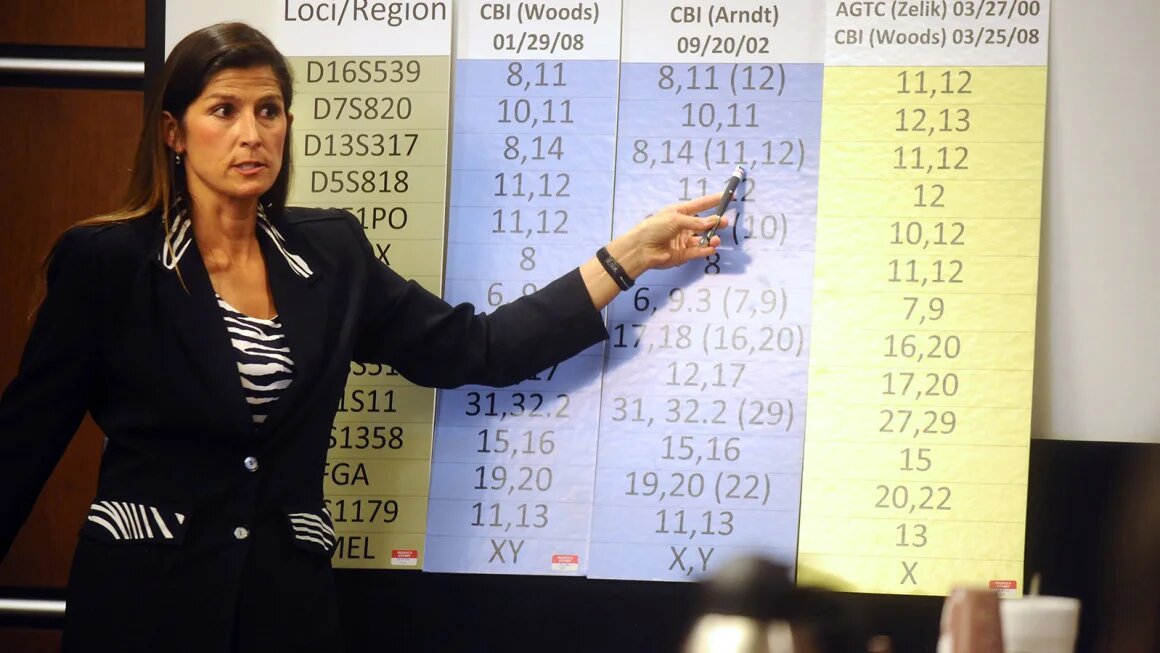

The recent revelation surrounding the conduct of former forensic scientist Yvonne “Missy” Woods at the Colorado Bureau of Investigation (CBI) has sent shockwaves through the legal community. An extensive internal affairs investigation uncovered a troubling pattern of data manipulation and omission spanning nearly three decades, casting a shadow of doubt over the integrity of forensic […]

New Beneficial Ownership Database: Implications for Privacy and Law Enforcement

The U.S. Treasury Department is set to launch a comprehensive database of corporate ownership information, but its access rules are raising concerns about privacy and potential misuse by law enforcement. The initiative, driven by the Corporate Transparency Act, aims to curb money laundering and the use of anonymous shell companies for illegal activities. However, there […]

Google’s Move to Limit Geofence Warrants: A Victory for Privacy and Criminal Defense

Google has recently taken a significant step to address a longstanding concern of privacy advocates and criminal defense attorneys alike: the use of geofence warrants. These warrants, also known as reverse-location searches, have raised serious questions about individual privacy and about the consequences for people caught in the wide net of surveillance. In this […]

The Dark Side of NFTs: Understanding How They’re Used for Money Laundering

Non-fungible tokens (NFTs) have been making headlines as digital art and collectibles continue to gain popularity. However, alongside their legitimate uses, NFTs have also attracted the attention of criminals seeking new methods for money laundering. In this blog post, we’ll delve into the details of how NFTs are being exploited for money laundering and […]

The Forensic Investigation of NFTs in Money Laundering: A Law Enforcement Perspective

As the popularity of non-fungible tokens (NFTs) continues to grow, so does their potential to be exploited in money laundering schemes. Consequently, law enforcement agencies are developing methods to forensically investigate NFTs involved in illicit activities. In this section of the blog post, we will explore some of the techniques and […]

In-Depth Look at Chainalysis, Elliptic, and CipherTrace: Forensic Science in Blockchain Analysis

The rapid growth of cryptocurrencies and blockchain technology has made it increasingly important for law enforcement and financial institutions to understand and monitor blockchain transactions. Chainalysis, Elliptic, and CipherTrace are three leading companies in the field of blockchain forensics, providing tools and services for analyzing cryptocurrency transactions for signs of illegal activity. […]

Juice Jacking: Understanding the Risks, Forensic Science, and How Unwitting Victims Can Face Investigations

In today’s technology-driven world, we often rely on public charging stations to keep our devices powered up on the go. However, these convenient charging spots can sometimes become a hotbed of cybercriminal activity, leading to a phenomenon known as “juice jacking.” This blog post aims to provide a comprehensive and technical understanding of juice jacking, […]

AirTags and Stalking: A Deep Dive into the Forensic Science Behind Apple’s Tracking Device

AirTags, Apple’s compact and user-friendly tracking devices, have taken the world by storm since their release. Designed to help users locate misplaced items, AirTags use Bluetooth and the vast Find My network to pinpoint the location of lost belongings. However, their tracking capabilities have also sparked concerns about their potential misuse for stalking purposes. […]

Performance-Enhancing Drug Detection in Sports: Analytical Techniques and Substances Detected

Throughout the history of drug testing in sports, various analytical techniques have been used to detect an ever-evolving range of performance-enhancing substances. Some of the key techniques and the substances they test for include:

Gas Chromatography-Mass Spectrometry (GC-MS): a widely used analytical technique for detecting a range of substances, including anabolic steroids, stimulants, and masking […]

Gene Doping in Sports: Methods and Detection Issues

Gene doping is a form of genetic manipulation that involves altering an individual’s DNA or using gene therapy techniques to enhance athletic performance. It represents a significant challenge for anti-doping authorities, as it is difficult to detect and can potentially provide substantial performance advantages. Gene doping works by modifying or introducing specific genes into an […]

The History of Performance-Enhancing Drugs in Sports: An Introduction to Detection Methods

The history of testing for performance-enhancing drugs in international sports is a complex and evolving story. It has been shaped by the development of new scientific techniques, the establishment of international organizations, and an ongoing battle against doping. This overview outlines the key milestones and scientific methods in the history of anti-doping efforts.

1960s: […]